RGB↔X: Image decomposition and synthesis using material- and lighting-aware diffusion models

Abstract

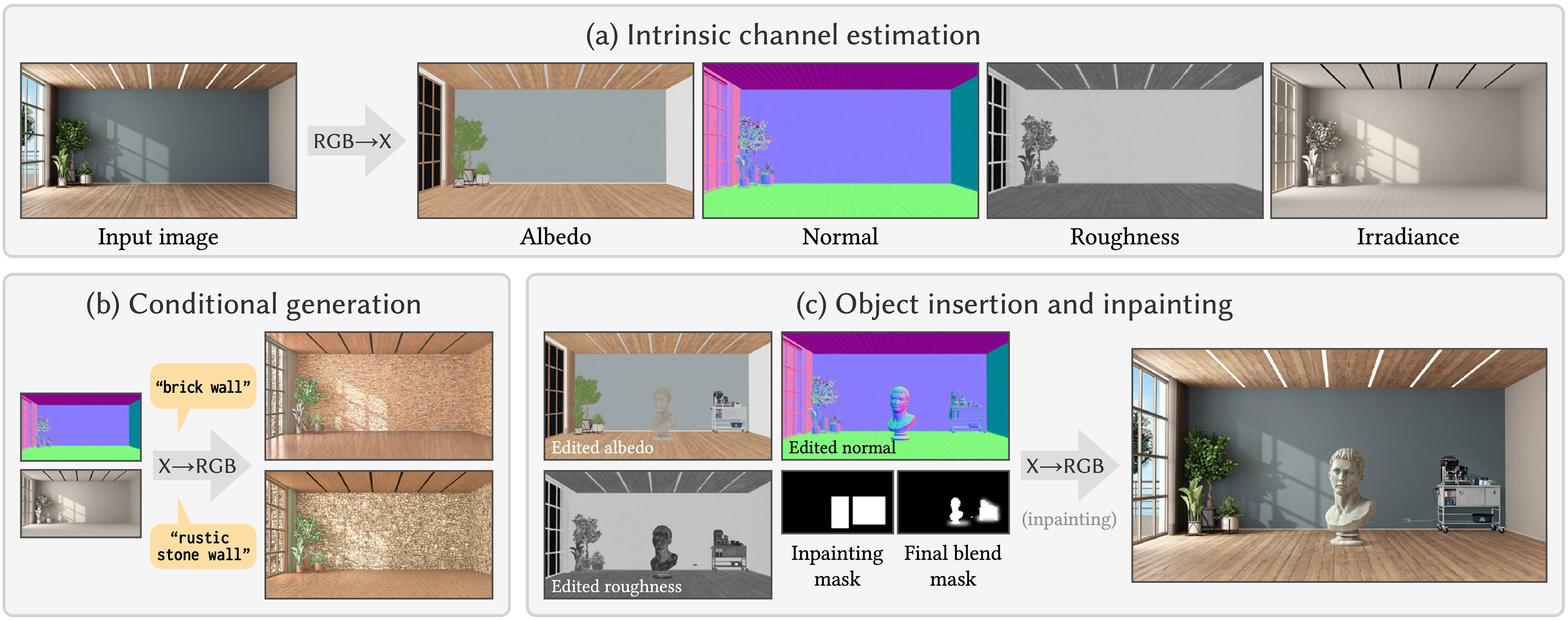

The three areas of realistic forward rendering, per-pixel inverse rendering, and generative image synthesis may seem like separate and unrelated sub-fields of graphics and vision. However, recent work has demonstrated improved estimation of per-pixel intrinsic channels (albedo, roughness, metallicity) based on a diffusion architecture; we call this the RGB→X problem. We further show that the reverse problem of synthesizing realistic images given intrinsic channels, X→RGB, can also be addressed in a diffusion framework. Focusing on the image domain of interior scenes, we introduce an improved diffusion model for RGB→X, which also estimates lighting, as well as the first diffusion X→RGB model capable of synthesizing realistic images from (full or partial) intrinsic channels. Our X→RGB model explores a middle ground between traditional rendering and generative models: We can specify only certain appearance properties that should be followed, and give freedom to the model to hallucinate a plausible version of the rest. This flexibility allows using a mix of heterogeneous training datasets that differ in the available channels. We use multiple existing datasets and extend them with our own synthetic and real data, resulting in a model capable of extracting scene properties better than previous work and of generating highly realistic images of interior scenes.

Downloads and links

- paper (PDF, 39 MB)

- supplemental results – RGB→X decomposition and X→RGB synthesis on synthetic and real data

- fast-forward video (MP4, 16 MB)

- project page

- arXiv preprint

- code – reference implementation

- citation (BIB)

BibTeX reference

@inproceedings{Zeng:2024:RGBX,

author = {Zheng Zeng and Valentin Deschaintre and Iliyan Georgiev and Yannick Hold-Geoffroy and Yiwei Hu and Fujun Luan and Ling-Qi Yan and Miloš Hašan},

title = {RGB↔X: Image decomposition and synthesis using material- and lighting-aware diffusion models},

booktitle = {ACM SIGGRAPH 2024 Conference Proceedings},

year = {2024},

doi = {10.1145/3641519.3657445},

isbn = {979-8-4007-0525-0}

}