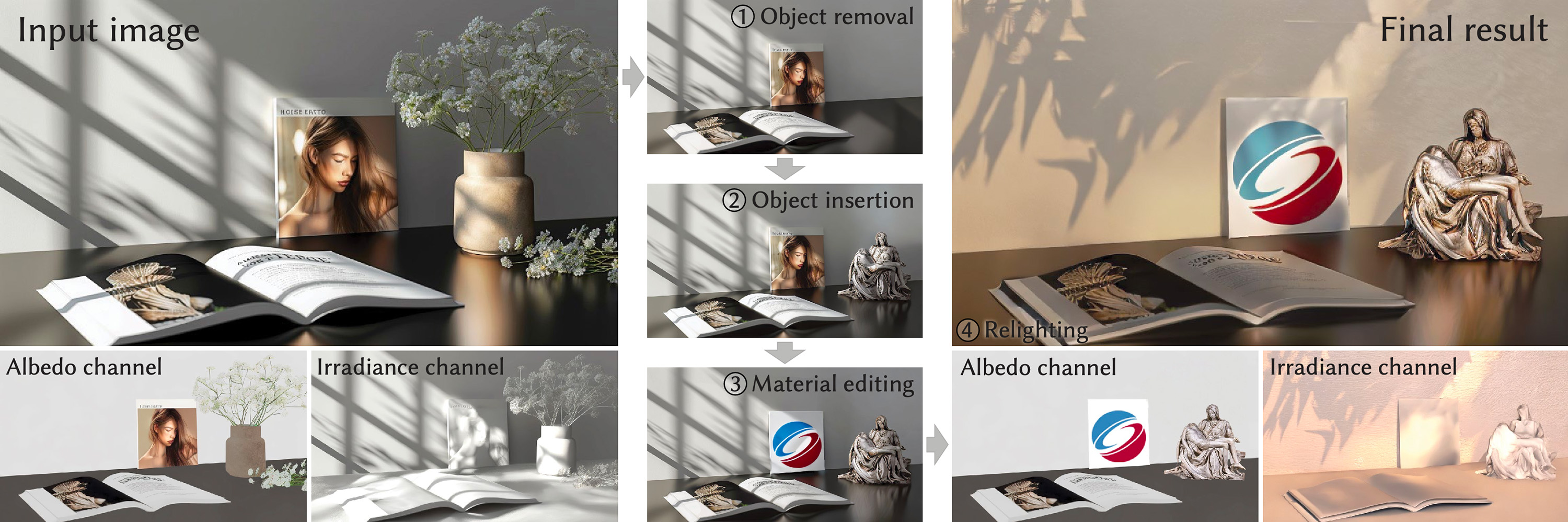

IntrinsicEdit: Precise generative image manipulation in intrinsic space

Abstract

Generative diffusion models have advanced image editing by delivering high-quality results through intuitive interfaces such as prompts, scribbles, and semantic drawing. However, these interfaces lack precise control, and the associated editing methods often specialize in a single task. We introduce a versatile editing workflow that operates in an intrinsic-image latent space and supports a range of tasks, enabling semantic, local manipulation with pixel precision while automatically handling effects such as reflections and shadows. We build on the RGB↔X diffusion framework and address its key deficiencies: the lack of identity preservation and the need to update multiple channels to achieve plausible results. We propose an edit-friendly diffusion inversion and a prompt-embedding optimization that enable precise and efficient editing of only the relevant channels. Our method preserves identity and resolves global illumination without requiring task-specific model fine-tuning. We demonstrate state-of-the-art performance across a variety of tasks on complex images, including material adjustments, object insertion and removal, global relighting, and their combinations.

Downloads and links

- paper (PDF, 11 MB)
- supplemental document (PDF, 25 MB)
- arXiv preprint
- project page
- code – reference implementation
- citation (BIB)

BibTeX reference

@article{Lyu:2025:IntrinsicEdit,
  author = {Linjie Lyu and Valentin Deschaintre and Yannick Hold-Geoffroy and Miloš Hašan and Jae Shin Yoon and Thomas Leimkühler and Christian Theobalt and Iliyan Georgiev},
  title = {IntrinsicEdit: Precise generative image manipulation in intrinsic space},
  journal = {ACM Transactions on Graphics (Proceedings of SIGGRAPH)},
  year = {2025},
  volume = {44},
  number = {4},
  doi = {10.1145/3731173}
}